Introducing the Straightforward Way to DeepSeek China AI

The Qwen and LLaMA versions are specific distilled models that integrate with DeepSeek and can serve as foundational models for fine-tuning using DeepSeek's RL techniques. Not only that, StarCoder has outperformed open code LLMs like the one powering earlier versions of GitHub Copilot. The open-source model is hosted fully independent of China. After each GPU has completed a forward and backward pass, gradients are accumulated across GPUs for a global model update. In the face of disruptive technologies, moats created by closed source are temporary. The models are accessible for local deployment, with detailed instructions provided for users to run them on their own systems, and they can be run completely offline. The local model you can download is called DeepSeek-V3, which is part of the DeepSeek R1 series of models. Tom's Guide recently pitted DeepSeek against ChatGPT with a series of seven prompts, and on almost all of them DeepSeek provided a better answer. "We introduce an innovative methodology to distill reasoning capabilities from the long-Chain-of-Thought (CoT) model, specifically from one of the DeepSeek R1 series models, into standard LLMs, particularly DeepSeek-V3." Multiple reasoning modes are available, including "Pro Search" for detailed answers and "Chain of Thought" for transparent reasoning steps. Below are details of each of them.
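The data-parallel step mentioned above, where each GPU runs its own forward and backward pass and the gradients are then combined for one global update, can be sketched in plain Python. This is only an illustration under stated assumptions: the two "GPUs" are simulated as list entries, and the names `local_gradient`, `all_reduce_mean`, and `train`, the toy data, and the learning rate are all hypothetical, not taken from DeepSeek's training code. A real system would use a collective communication library instead of a Python loop.

```python
from typing import List, Tuple

Shard = List[Tuple[float, float]]  # (x, y) pairs held by one simulated GPU


def local_gradient(w: float, shard: Shard) -> float:
    """Gradient of the mean squared error for y ~ w * x on one worker's shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(grads: List[float]) -> float:
    """Stand-in for an all-reduce: every worker ends up with the mean gradient."""
    return sum(grads) / len(grads)


def train(shards: List[Shard], w: float = 0.0, lr: float = 0.05, steps: int = 200) -> float:
    for _ in range(steps):
        # Each simulated GPU computes a gradient on its own data shard.
        grads = [local_gradient(w, shard) for shard in shards]
        # The gradients are combined so all workers apply the same update.
        w -= lr * all_reduce_mean(grads)
    return w


# Toy data generated from y = 3 * x, split evenly across two simulated GPUs.
shards = [[(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0), (4.0, 12.0)]]
w = train(shards)
print(round(w, 6))
```

Because the shards are the same size, averaging the per-shard gradients gives the same result as computing one gradient over all the data, so the learned weight converges toward 3.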

People are learning how powerfully these chatbots, also called generative AI, can help with a wide range of tasks, such as answering questions, providing information, scheduling appointments, and even ordering products or services. This new method effectively accounts for data from the long tails of distributions, improving the performance of algorithms in self-supervised learning. The distilled models are fine-tuned based on open-source models like the Qwen2.5 and Llama3 series, enhancing their performance in reasoning tasks. Tech giants are rushing to build out massive AI data centers, with plans for some to use as much electricity as small cities. "DeepSeek on Perplexity is hosted in US/EU data centers - your data never leaves Western servers." "DeepSeek R1 is now available on Perplexity to support deep web research." One thing to keep in mind before dropping ChatGPT for DeepSeek is that you won't be able to upload images for analysis, generate images, or use some of the breakout tools like Canvas that set ChatGPT apart. Until now, traditional industries in China have struggled with rising labor costs caused by the country's growing aging population and low birth rate.

When the United States blocked China from accessing satellite navigation technology, China developed BeiDou, its homegrown alternative to the Global Positioning System (GPS). DeepSeek says its model rivals those of ChatGPT maker OpenAI and was more cost-effective in its use of expensive Nvidia chips to train the system on enormous troves of data. The team introduced cold-start data before RL, leading to the development of DeepSeek-R1. DeepSeek-R1 employs a Mixture-of-Experts (MoE) design with 671 billion total parameters, of which 37 billion are activated for each token. In addition to performance, Chinese companies are challenging their US competitors on price. Working with experienced AI development companies can help businesses effectively integrate these powerful LLMs into their operations. With Cascade, you can quickly build SaaS applications. It is open-sourced and fine-tunable for specific business domains, and more tailored to commercial and enterprise applications. IBM open-sourced new AI models to accelerate materials discovery, with applications in chip fabrication, clean energy, and consumer packaging. As today's AI developers mature and as AI disperses into applications, the historical lesson remains important: unchecked consolidation of power stifles the innovation necessary for economic growth, national security, and consumer safety. The Dutch Data Protection Authority had also earlier urged citizens to use the app with caution.
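The Mixture-of-Experts figures quoted above are easier to appreciate as a ratio. The short calculation below uses only the two parameter counts from the text; the variable names are illustrative.

```python
# Parameter counts as stated in the text above.
TOTAL_PARAMS = 671e9   # total parameters in the MoE model
ACTIVE_PARAMS = 37e9   # parameters activated for each token

active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
sparsity_ratio = TOTAL_PARAMS / ACTIVE_PARAMS

print(f"Active per token: {active_fraction:.1%}")        # prints "Active per token: 5.5%"
print(f"Total-to-active ratio: {sparsity_ratio:.1f}x")   # prints "Total-to-active ratio: 18.1x"
```

In other words, roughly one parameter in eighteen takes part in processing any given token, even though all 671 billion still have to be stored.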

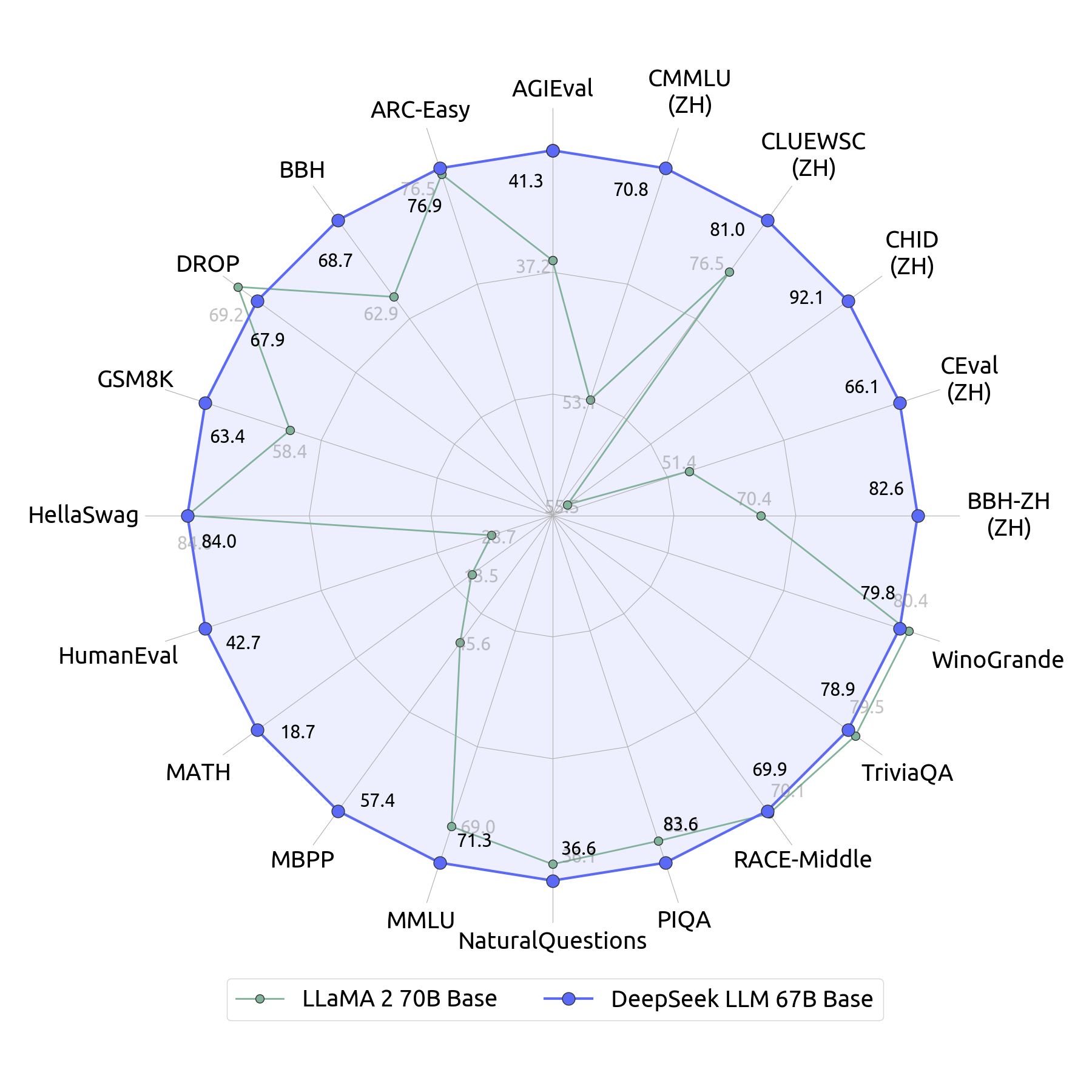

MIT researchers have developed Heterogeneous Pretrained Transformers (HPT), a novel model architecture inspired by large language models, designed to train adaptable robots by using data from multiple domains and modalities. MMLU is used to test knowledge across multiple academic and professional domains. Its goal is to democratize access to advanced AI research by offering open and efficient models for the academic and developer community. It is more oriented toward academic and open research. A key open question is the extent to which the quality of chains of thought becomes important for the input datasets of these models: s1 is based on refined chains of thought from Google Gemini, and DeepSeek is widely thought to have trained in part on chains of thought derived from OpenAI's o1 model. The team then distilled the reasoning patterns of the larger model into smaller models, resulting in enhanced performance. There's a new Pro Search reasoning mode selector, including OpenAI o1, with a transparent chain of thought into the model's reasoning. In a Mixture-of-Experts model, only a subset of the model's parameters is activated for each input. They open-sourced various distilled models ranging from 1.5 billion to 70 billion parameters. Smaller models can be used in environments like edge or mobile devices, where there is less computing and memory capacity.
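The idea that only a subset of parameters is activated for each input can be illustrated with a toy top-k router. This is a minimal sketch under loud assumptions: the function `moe_forward`, the four lambda "experts", and the fixed router scores are all hypothetical, and in a real model the router scores come from a learned gating layer. It is not DeepSeek's actual routing scheme.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: float,
    router_logits: Sequence[float],
    experts: Sequence[Callable[[float], float]],
    k: int = 2,
) -> Tuple[float, List[int]]:
    """Run only the k highest-scoring experts and mix their outputs."""
    # Pick the indices of the k experts with the largest router scores.
    top = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)[:k]
    # Normalize the scores of the selected experts into mixing weights.
    weights = softmax([router_logits[i] for i in top])
    # Experts outside `top` are never called, so their parameters stay idle.
    output = sum(w * experts[i](x) for w, i in zip(weights, top))
    return output, top


# Four toy "experts"; assumed router scores favor experts 1 and 2 equally.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
logits = [0.1, 2.0, 2.0, -1.0]

out, used = moe_forward(3.0, logits, experts, k=2)
print(used, out)
```

Here only two of the four experts run for the input, which is the same principle that lets a large model activate a small share of its parameters per token.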
